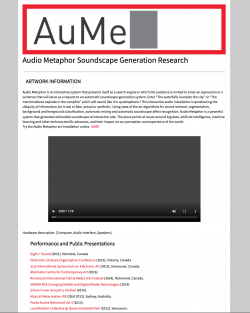

AuMe: Audio Metaphor Soundscape Generation Research

Type

Other

Authors

Thorogood, Miles

Pasquier, Philippe

Kranabetter, Joshua

Bougueng, Renaud

Fan, Jianyu

Eigenfeldt, Arne

Category

Website

URL

[ private ]

Abstract

Audio Metaphor is an interactive system presented as a search engine: the audience is invited to enter an expression or a sentence, which serves as a request to an automatic soundscape generation system. Enter “The waterfalls inundate the city” or “The marshmallows explode in the campfire” and the system will make it sound that way, in quadraphonic sound! This interactive audio installation questions the ubiquity of information, be it real or fake, actual or synthetic. Audio Metaphor uses state-of-the-art algorithms for sound retrieval, segmentation, background and foreground classification, automatic mixing, and automatic soundscape affect recognition to generate believable soundscapes at interactive rates. The piece points at issues around big data, artificial intelligence, machine learning, and other technoscientific advances, and their impact on our perception and experience of the world.

Audio Metaphor is a pipeline of computational tools for generating artificial soundscapes. The pipeline includes modules for audio file search, segmentation and classification, and mixing. The input to the pipeline is a sentence, a desired duration, and curves for pleasantness and eventfulness. Each module can be used independently, or the modules can be used together to generate a soundscape from a sentence.
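
The inputs listed above can be pictured as a small request object passed through a chain of interchangeable modules. The following Python sketch is illustrative only: `SoundscapeRequest`, `value_at`, `run_pipeline`, and the representation of the pleasantness and eventfulness curves as time/value breakpoints are hypothetical names and assumptions, not Audio Metaphor's actual interface. Treating each module as a plain callable is one way to let the modules be used on their own or together.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical curve format: (time in seconds, value) breakpoints sorted by time.
Curve = List[Tuple[float, float]]


@dataclass
class SoundscapeRequest:
    sentence: str          # scenario text entered by the user
    duration: float        # desired soundscape length in seconds
    pleasantness: Curve    # target pleasantness over time
    eventfulness: Curve    # target eventfulness over time


def value_at(curve: Curve, t: float) -> float:
    """Linearly interpolate a breakpoint curve at time t, clamping at both ends."""
    if t <= curve[0][0]:
        return curve[0][1]
    for (t0, v0), (t1, v1) in zip(curve, curve[1:]):
        if t0 <= t <= t1:
            if t1 == t0:
                return v1
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return curve[-1][1]


def run_pipeline(request, search, segment, classify, mix):
    """Chain hypothetical module callables: search -> segment -> classify -> mix."""
    sounds = search(request.sentence)
    segments = [seg for sound in sounds for seg in segment(sound)]
    labelled = [classify(seg) for seg in segments]
    return mix(labelled, request)


request = SoundscapeRequest(
    "The waterfalls inundate the city", 30.0,
    [(0.0, -0.5), (30.0, 0.5)],   # pleasantness rises over the piece
    [(0.0, 0.0), (30.0, 1.0)],    # eventfulness rises over the piece
)
print(value_at(request.pleasantness, 15.0))  # 0.0
```

Because each stage is only a callable, any single module can be exercised alone, for example by reading the target pleasantness at a given time with `value_at`.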

Audio Metaphor is a soundscape generation system that transforms text into soundscapes. A user enters a sentence describing a scenario, along with the desired mood and duration. Audio Metaphor analyzes this text, selects sounds from a database, cuts these sounds up, and recombines them in a sound design process.

The text analysis identifies key semantic indicators, which are used to search for related sounds either locally or online. The SLiCE algorithm attempts to optimize the search so that each result matches as many of the keywords in combination as possible. Sounds returned from the search are cut into segments based on a perceptual model of background and foreground sound. Each classified segment is then run through a predictive model that assigns it a mood-based label from a two-dimensional affect space. We developed both of these models from human listening experiments, with the aim of automating these perceptual judgements.
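
The text above does not describe how SLiCE works internally. As a hypothetical illustration of searching for results that combine as many keywords as possible, the sketch below queries keyword combinations from largest to smallest and greedily keeps a hit whenever none of its keywords is already covered. This is a swapped-in greedy strategy, not the published SLiCE algorithm; the `search` callable, `toy_search`, and the in-memory `DB` are assumptions standing in for a local or online sound database.

```python
from itertools import combinations
from typing import Callable, List, Sequence, Tuple

# Hypothetical search interface: a tuple of keywords in, matching sound IDs out.
Search = Callable[[Tuple[str, ...]], List[str]]


def combine_keyword_search(keywords: Sequence[str], search: Search) -> List[Tuple[Tuple[str, ...], str]]:
    """Greedy sketch (not SLiCE itself): prefer results that match the most keywords at once."""
    covered = set()
    chosen = []
    # Try the largest keyword combinations first, then progressively smaller ones.
    for size in range(len(keywords), 0, -1):
        for combo in combinations(keywords, size):
            if covered.intersection(combo):
                continue  # skip combinations that reuse an already-covered keyword
            results = search(combo)
            if results:
                chosen.append((combo, results[0]))  # keep the top-ranked hit
                covered.update(combo)
    return chosen


# Toy database: sound file -> set of descriptive tags (hypothetical data).
DB = {
    "falls_downtown.wav": {"waterfall", "city"},
    "flood.wav": {"inundate", "water"},
    "traffic.wav": {"city"},
}


def toy_search(combo: Tuple[str, ...]) -> List[str]:
    """Return every sound whose tags include all keywords in the combination."""
    return [name for name, tags in DB.items() if set(combo) <= tags]


print(combine_keyword_search(["waterfall", "inundate", "city"], toy_search))
# [(('waterfall', 'city'), 'falls_downtown.wav'), (('inundate',), 'flood.wav')]
```

In the worst case every non-empty subset is queried, 2^n - 1 queries for n keywords, which stays manageable for the handful of keywords extracted from a single sentence.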

A mixing engine takes the labelled sound segments and selects, arranges, and mixes them into the final soundscape. The engine creates a separate track for each semantic group returned by the search and inserts the corresponding sounds onto these tracks according to the overall mood of the mix at each point in time. The volume envelope of the mix is calculated by the control system. The generated results reveal the human-like creative processes of the system, which is used to assist sound designers in game sound, sound for animation, and computational arts.
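
The track-per-group arrangement described above can be sketched as a nearest-neighbour choice in the affect space. This is a minimal sketch under stated assumptions: `Segment`, `arrange`, discrete time steps, and Euclidean distance in a pleasantness and eventfulness plane are all hypothetical choices, and the sketch ignores volume envelopes and background and foreground roles.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Segment:
    name: str
    group: str            # semantic group from the search (one track per group)
    pleasantness: float   # hypothetical affect label, first dimension
    eventfulness: float   # hypothetical affect label, second dimension


def arrange(segments: List[Segment], targets: List[Tuple[float, float]]) -> Dict[str, List[str]]:
    """At each time step, place on every track the segment closest to the target mood."""
    tracks: Dict[str, List[Segment]] = {}
    for seg in segments:
        tracks.setdefault(seg.group, []).append(seg)
    timeline: Dict[str, List[str]] = {group: [] for group in tracks}
    for p, e in targets:  # target (pleasantness, eventfulness) per time step
        for group, candidates in tracks.items():
            best = min(candidates, key=lambda s: math.hypot(s.pleasantness - p, s.eventfulness - e))
            timeline[group].append(best.name)
    return timeline


segments = [
    Segment("calm_rain", "rain", 0.8, -0.6),
    Segment("storm", "rain", -0.5, 0.9),
    Segment("quiet_street", "city", 0.3, -0.4),
    Segment("horns", "city", -0.7, 0.8),
]
print(arrange(segments, [(0.7, -0.5), (-0.6, 0.9)]))
# {'rain': ['calm_rain', 'storm'], 'city': ['quiet_street', 'horns']}
```

A nearest-neighbour rule keeps the selection transparent: moving the target curve toward the unpleasant, eventful corner swaps calmer segments for busier ones on every track.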

Description

http://audiometaphor.ca/

Number of Copies

1

| Library | Accession No | Call No | Copy No | Edition | Location | Availability |
|---|---|---|---|---|---|---|
| Main | 263 | | 1 | | | Yes |